"Beam Me Up, Scotty"

Migrating into an Evergreen Consortium

Derek C. Zoladz, OhioNET

Project Coordinator, Consortium of Ohio Libraries

Today's Agenda

Hover over the MIGRATION PROCESS

WARP to a distress call from an unknown species

RESCUE A COLONY from a dying planet

Set a course for FURTHER EXPLORATION

From a distance...

All migrations are the same.

QUESTION GENERATION

Am I going to need to learn new skills?

How long is this going to take to complete?

Is this an opportunity for a shelving reorganization?

Should we standardize barcodes for patrons and items?

Will we need to modify library policies or maintain our current policies?

Are there tools available to speed up the process?

Will I need to learn to catalog in a different way?

Do we have the skills internally?

Will our use of MARC fields need to change?

Should we migrate all of our patron records?

Do we *really* need to weed our collection?

What in the world is a circulation modifier?

What legacy functionality will we need to furnish elsewhere?

Should we migrate or refresh our authority records?

Is that item *really* coming back?

Where do I go for help when I need it?

How much time should I allocate to each task?

Is this an opportunity for patron fine forgiveness?

What criteria should I use to weed patron records?

What new functionality will we be able to take advantage of?

Are the staff onboard?

Am I ready to make the switch?

Planning

Write down every step in the migration.

Break those steps down into smallish tasks.

Order the steps, working backward from the launch date.

Assign responsibility for each task or deliverable.

Get to it and check in weekly or so.
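Working backward from the launch date is simple date arithmetic: each task's due date is the start date of the task that follows it. A minimal sketch, assuming a hypothetical task list (names, owners, and week estimates are placeholders) and a hypothetical launch date:

```python
from datetime import date, timedelta

# Hypothetical tasks, in the order they must happen: (task, owner, estimated weeks).
TASKS = [
    ("Extract legacy data", "Local systems staff", 2),
    ("Map and clean data", "Local systems staff", 3),
    ("Load into a test instance", "Consortium staff", 1),
    ("Staff feedback and testing", "All staff", 2),
]


def schedule_backward(tasks, launch):
    """Return (task, owner, start, due) tuples so the last task ends on launch day."""
    schedule = []
    due = launch
    for name, owner, weeks in reversed(tasks):
        start = due - timedelta(weeks=weeks)
        schedule.append((name, owner, start, due))
        due = start  # the previous task must finish before this one starts
    schedule.reverse()  # back into calendar order
    return schedule


if __name__ == "__main__":
    for name, owner, start, due in schedule_backward(TASKS, date(2025, 6, 2)):
        print(f"{start} to {due}: {name} ({owner})")
```

Printing the schedule gives each owner a concrete start and due date to check in against.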

Data Extraction

DIRECT DB: psql, pgAdmin, Sequel Pro, phpMyAdmin

ILS FUNCTIONS: Export data, report generation

THIRD PARTY: III exit services, Equinox

Explore data. Identify procedures. Formalize.
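One way to explore an export before formalizing procedures is to profile it. A sketch (the function name is hypothetical) that counts, per column, how many rows fill it, how many distinct values it holds, and which values dominate; that quickly exposes unused fields and codes that will need mapping:

```python
import csv
from collections import Counter


def profile_export(lines, top=5):
    """Profile a CSV export that has a header row.

    `lines` is any iterable of text lines, e.g. a file opened with newline=''.
    Returns (row_count, report); report maps each column to its fill count,
    number of distinct values, and most common values.
    """
    reader = csv.DictReader(lines)
    columns = reader.fieldnames or []
    counts = {col: Counter() for col in columns}
    rows = 0
    for row in reader:
        rows += 1
        for col in columns:
            value = (row.get(col) or "").strip()
            if value:  # blank cells count as unfilled
                counts[col][value] += 1
    report = {
        col: {
            "filled": sum(c.values()),
            "distinct": len(c),
            "top": c.most_common(top),
        }
        for col, c in counts.items()
    }
    return rows, report
```

For a real export, open the file with `newline=''` and the right `encoding=`, then pass the file object in place of `lines`.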

Data Manipulation

OMG: Lots of opportunity for improvement!

SCRIPTING: Perl, Python, MarcEdit .task

CLONE ME: ILS-specific tools, file conversions, .py .pl .sql

git clone git://git.esilibrary.com/migration-tools.git

Data Ingestion

Evergreen: PostgreSQL, OpenSRF, XML, XMPP, Perl

For anything more than a few thousand records, you'll need to load from the command line.
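A sketch of driving that command-line load from a script. It assumes psql is installed; the database name, host, and user are placeholders for your own. `ON_ERROR_STOP=1` and `-1` (single transaction) are standard psql options that make a failed load stop and roll back instead of half-finishing:

```python
import subprocess


def psql_load_command(sql_file, dbname="evergreen", host="localhost", user="evergreen"):
    """Build the psql command line for one load script.

    The connection defaults are placeholders; substitute your server's values.
    """
    return [
        "psql",
        "-h", host,
        "-U", user,
        "-d", dbname,
        "-v", "ON_ERROR_STOP=1",  # exit non-zero at the first failed statement
        "-1",                     # run the whole file as a single transaction
        "-f", sql_file,
    ]


def run_load(sql_file, **connection):
    """Run the script; raises subprocess.CalledProcessError if psql reports an error."""
    subprocess.run(psql_load_command(sql_file, **connection), check=True)
```

For example, `run_load("load_colony.sql", dbname="eg_test")` would run a hypothetical load script against a test database first.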

Feedback/Testing Phase

Recapitulation

Data Extraction

Data Manipulation

Data Ingestion

ALERT =>

obligatory code slides ahead

Warp to Distress Call

DATA: Captain, I am detecting a transmission emanating from within the system. RIKER: What sort of signal? DATA: It is self-repeating, of unknown pattern. PICARD: Where is it coming from?... RIKER: It could be a distress call. Helm, take us into transport range.

Send request for DNA

Data Export

Species Incompatible

DATA MAPPING: INLEX, MBLOCK, GENDER

DATA FORMATTING: Timestamps, Types, Encoding

DISARTICULATING DATA: Full Name, ## on phone numbers

GENERATE DATA: Juvenile flag from DOB, for instance
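The four kinds of transformation above, sketched as small functions that could be called inside the csv loop. The patron-type mapping, the legacy date format (`%m/%d/%Y`), and the adult age of 18 are all assumptions to replace with your own rules:

```python
import re
from datetime import date, datetime

# Hypothetical mapping from legacy patron-type codes to Evergreen profile
# (permission group) ids; replace with the values your consortium agrees on.
PATRON_TYPE_TO_PROFILE = {"ADULT": 2, "JUV": 2, "STAFF": 3}


def map_code(value, mapping, field):
    """DATA MAPPING: translate a legacy code, failing loudly on anything unmapped."""
    key = value.strip().upper()
    if key not in mapping:
        raise ValueError(f"unmapped {field} code: {value!r}")
    return mapping[key]


def to_iso_date(raw, legacy_format="%m/%d/%Y"):
    """DATA FORMATTING: legacy date string -> ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(raw.strip(), legacy_format).date().isoformat()


def split_full_name(full_name):
    """DISARTICULATING DATA: return (first, last) from 'Last, First' or 'First Last'."""
    full_name = full_name.strip()
    if "," in full_name:
        last, first = full_name.split(",", 1)
    else:
        first, _, last = full_name.rpartition(" ")
    return first.strip(), last.strip()


def normalize_phone(raw):
    """DISARTICULATING DATA: keep digits; format 10-digit numbers as NNN-NNN-NNNN."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading North American country code
    if len(digits) == 10:
        return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"
    return raw.strip()  # anything else is left as-is for manual review


def is_juvenile(dob_iso, today=None, adult_age=18):
    """GENERATE DATA: derive a juvenile flag from an ISO date of birth."""
    today = today or date.today()
    dob = date.fromisoformat(dob_iso)
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    age = today.year - dob.year - (0 if had_birthday else 1)
    return age < adult_age
```

Raising on unmapped codes, rather than silently defaulting, keeps surprises in the legacy data visible during testing.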

pymarc and the csv module

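import csv

# Read the legacy export and write it back out as UTF-8 CSV.
# The csv module expects files opened with newline=''.
# Pass encoding= on the input to match the legacy export (e.g. 'latin-1').
with open('/home/scotty/colony_in.csv', 'r', newline='') as infile, \
        open('/home/scotty/colony_out.csv', 'w', newline='', encoding='utf-8') as outfile:
    reader = csv.reader(infile)
    writer = csv.writer(outfile, delimiter=',', quoting=csv.QUOTE_MINIMAL)
    for row in reader:
        # Map, reformat, or split fields here before writing the row.
        writer.writerow(row)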

Rescue Mission

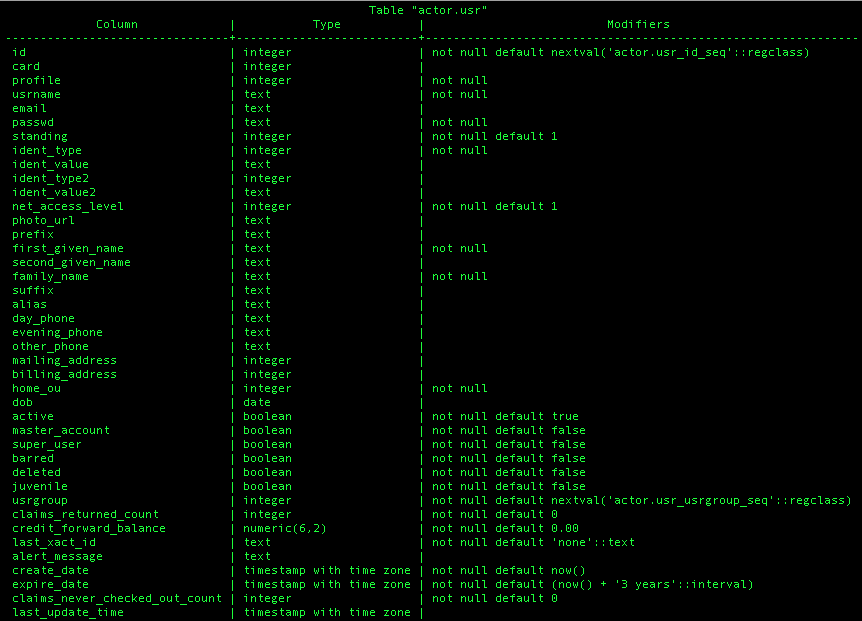

Data Ingestion

Three crucial tables in the Actor schema

actor.usr -- core User object

actor.card -- library user barcodes

actor.org_unit -- bring in their libraries

Prepare Colony for Transport

CREATE TABLE s_colony (
    barcode text,
    last_name text,
    first_name text,
    email text,
    phone text,
    profile int DEFAULT 2,
    ident_type int DEFAULT 3,
    home_ou int,
    claims_returned_count int DEFAULT 0,
    usrname text,
    passwrd text
);

Activate Transporter

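-- COPY ... FROM '<file>' is read by the database server, so the file must be
-- on the server and the role needs superuser or pg_read_server_files;
-- psql's \copy takes the same options and reads the file from the client.
COPY s_colony (barcode, last_name, first_name, email, phone,
               home_ou, usrname, passwrd)
FROM '/home/scotty/colony_out.csv'
WITH CSV HEADER;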
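In CSV mode with HEADER, PostgreSQL skips the first input line rather than matching it to the column list (PostgreSQL 15 adds `HEADER MATCH` for that), so a column-order mistake can load text into the wrong columns without an error. A sketch of a pre-flight check; the function name is hypothetical and the expected list is copied from the COPY statement above:

```python
import csv

# Column order from the COPY statement; order matters.
COPY_COLUMNS = ["barcode", "last_name", "first_name", "email", "phone",
                "home_ou", "usrname", "passwrd"]


def check_header(lines, expected=COPY_COLUMNS):
    """Return a list of problems with the CSV header (empty if it matches)."""
    header = [h.strip() for h in next(csv.reader(lines), [])]
    problems = []
    if len(header) != len(expected):
        problems.append(f"expected {len(expected)} columns, found {len(header)}")
    for pos, (got, want) in enumerate(zip(header, expected), start=1):
        if got != want:
            problems.append(f"column {pos}: found {got!r}, COPY expects {want!r}")
    return problems
```

Run it against `colony_out.csv` (opened with `newline=''`) before issuing the COPY.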

Bring 'Em Onboard

INSERT INTO actor.usr (profile, usrname, email, first_given_name, family_name,
                       day_phone, home_ou, passwd, ident_type)
SELECT profile, s_colony.usrname, email, first_name, last_name,
       phone, home_ou, passwrd, ident_type
FROM s_colony;
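Assuming, as in a stock Evergreen schema, that usernames in actor.usr and barcodes in actor.card must be unique, a single duplicate aborts the whole INSERT. A sketch (hypothetical function name) that finds duplicates in the staged file first; it only catches collisions within the file, so clashes with patrons already in the consortium still need a query against actor.usr and actor.card:

```python
import csv
from collections import Counter


def find_duplicates(lines, columns=("usrname", "barcode")):
    """Return {column: sorted values that occur more than once} for a staged CSV.

    Blank values are ignored; comparison is exact (case-sensitive).
    """
    counts = {col: Counter() for col in columns}
    for row in csv.DictReader(lines):
        for col in columns:
            value = (row.get(col) or "").strip()
            if value:
                counts[col][value] += 1
    return {
        col: sorted(value for value, n in c.items() if n > 1)
        for col, c in counts.items()
    }
```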

Celebrate!

SET A COURSE FOR FURTHER EXPLORATION

- Setting up a Test Instance

- Other Presentations: Staging Tables & Dedupe

- Tools